Deep neural networks, or Perceptron vs dogs and cats

Many of us have seen a face transformed with FaceApp or the fake video of President Obama, and they are impressive. It is just as impressive to hear that researchers have modeled the verb tenses of 1,000 languages, that prosthetics are controlled by software that receives electrical muscle signals, or that judges in the United States have stirred debate by using systems that predict whether a candidate for bail will reoffend.

Somewhere in the news about these applications we always read or hear that they are the result of deep learning, a technology that is already a success factor for big companies and whose potential is leading countries to announce strategies to be at the vanguard of its development.

Qualitative results of full-head reenactment. ACM Transactions on Graphics

Deep neural networks are the latest advancement in machine learning, the branch of Artificial Intelligence that is responsible for discovering patterns and relationships in data in order to make predictions or decisions. Since their boom began in 2012, deep neural networks have been behind advanced facial recognition systems, computer-generated text, autonomous vehicle driving, and the AlphaGo system that beat the world Go champion in 2016 (read our article on the subject).

Part of the great success of deep neural networks comes from the fact that they can be used in activities that appear very different but correspond to the same type of problem, which machine learning calls classification.

When we want to know what movement the prosthesis should perform in response to certain muscle signals, whether an image shows a dog or a cat, or whether a person will reoffend, our goal is to classify each case into a category. In the first case we want to determine which category of movement corresponds to the set of muscle signals, in the second whether the image belongs to the category dog or cat, and in the third whether the answer belongs to the category yes or no.

Neural networks "learn" to assign a category from thousands and thousands of examples that contain some chosen data (attributes) and the category in which the example is classified. During their "training", without any previously defined rule, neural networks find patterns and relationships in the attributes of the examples in each category. Such patterns and relationships are used to classify new examples.

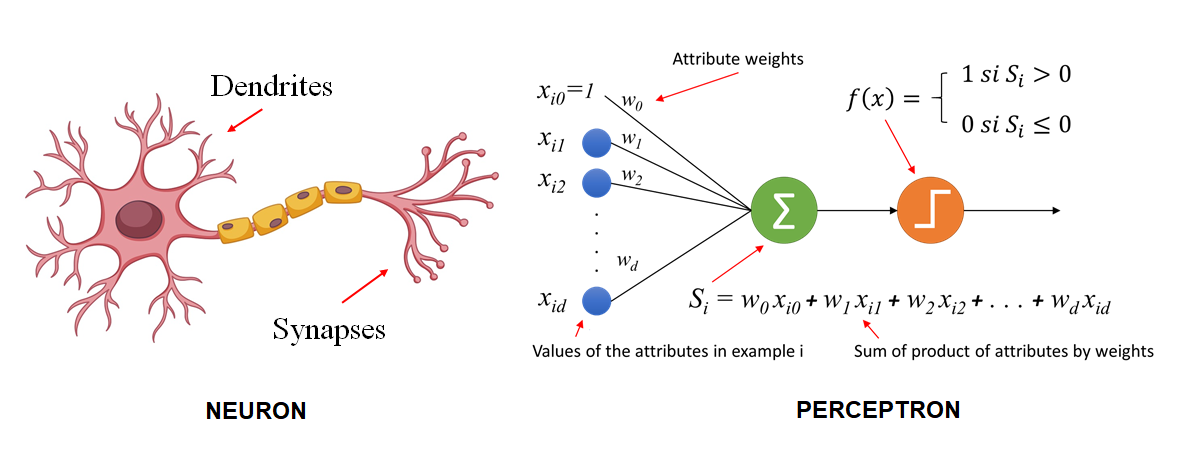

The first algorithm of this kind was implemented by Frank Rosenblatt in the 1950s using a device with electrical circuits and mechanical controls. The American psychologist named his project Perceptron. It had a design based on the functioning of brain neurons, which receive signals through their dendrites and, in response to certain combinations of them, emit signals through the synapses.

Perceptron receives the values of the attributes of an example, just as dendrites do in a neuron. Each attribute has a weight that measures its contribution to the final result, which is the sum of the products of each attribute's value and its corresponding weight. If the sum is greater than zero, Perceptron returns 1; otherwise it returns 0. Rosenblatt's algorithm found the weight values that made the result returned by Perceptron match the correct category for the greatest number of examples. (Neurons have been found to perform a similar process, in which experience strengthens or weakens the dendrites' connections.)
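The weighted sum and threshold described above can be sketched in a few lines of Python. This is only an illustration, not Rosenblatt's original procedure: the function names, the `bias` term, the learning rate, and the tiny logical-AND dataset are all assumptions introduced here. The weight update is the classic perceptron rule, which nudges each weight in proportion to the error on one example.

```python
def perceptron_output(weights, bias, attributes):
    """Return 1 if the weighted sum of the attributes is greater than zero, else 0."""
    # The bias is an assumption: the text only mentions the weighted sum.
    total = bias + sum(w * x for w, x in zip(weights, attributes))
    return 1 if total > 0 else 0


def train_perceptron(examples, epochs=20, learning_rate=1.0):
    """Adjust the weights with the classic perceptron rule (assumed here).

    Each example is a pair (attributes, category) with category 0 or 1.
    """
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for attributes, category in examples:
            # error is 0 when the answer is right, +1 or -1 when it is wrong
            error = category - perceptron_output(weights, bias, attributes)
            weights = [w + learning_rate * error * x
                       for w, x in zip(weights, attributes)]
            bias += learning_rate * error
    return weights, bias


# Hypothetical examples: two attributes, category 1 only when both are 1.
AND_EXAMPLES = [
    ((0.0, 0.0), 0),
    ((0.0, 1.0), 0),
    ((1.0, 0.0), 0),
    ((1.0, 1.0), 1),
]

weights, bias = train_perceptron(AND_EXAMPLES)
for attributes, category in AND_EXAMPLES:
    print(attributes, "->", perceptron_output(weights, bias, attributes))
```

A single perceptron of this kind can only separate categories with a straight line (or plane) through the attribute space, which is one reason the networks of many neurons discussed next are more powerful.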

The weights reflect patterns and relationships in the examples' attributes. The problem was that the calculations needed to obtain their values grew very large as the number of attributes and the number of categories increased. Additionally, as with brain neurons, a network of connected neurons is much more powerful, which led to the design of neural networks that complicated the model further. Finally, there was no method to guide how the weights should be adjusted to get closer to the solution.

All these problems long exceeded the capacity of the technology, so researchers lost interest in neural networks. It was not until 2012 that Geoffrey Hinton, a British-born researcher, led a team that swept a computer vision competition with a multi-layered neural network model, which thus received the name deep neural network.

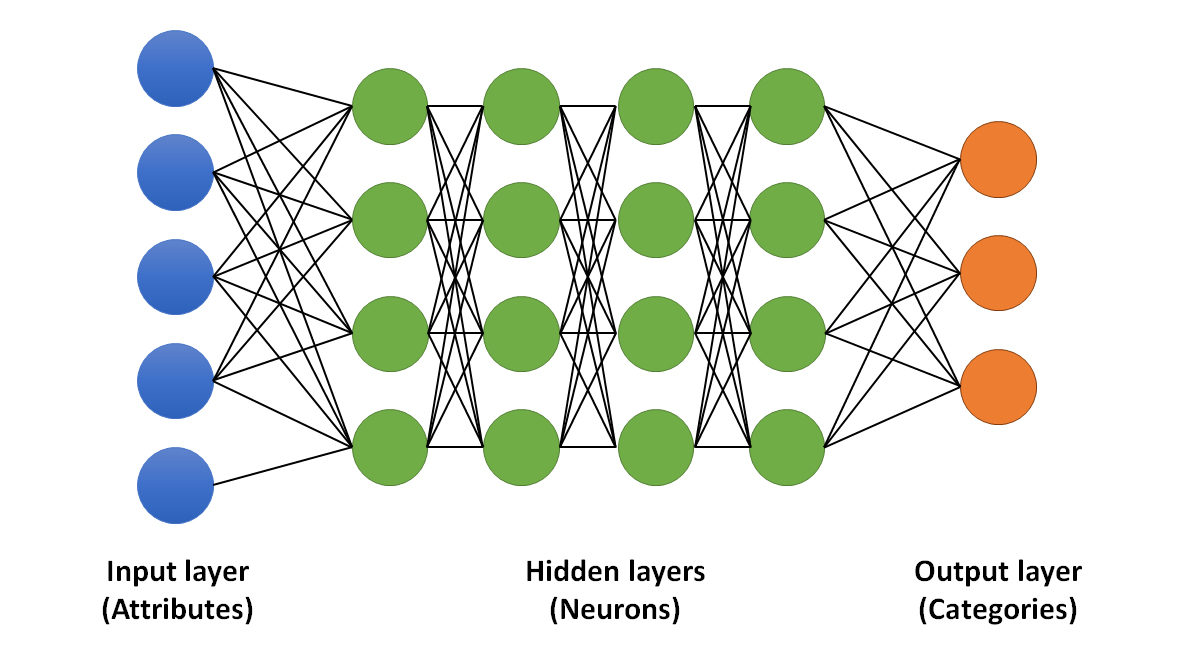

In these networks the attributes are received by the neurons of the first layer, each neuron with its own set of weights for the attributes. The results of the first layer are the inputs of the second, which also has its own weights, and so on until the final layer, which holds the categories into which the example can be classified. This model detects complex patterns and relationships in the attributes, so highly difficult classifications can be made, such as how much an autonomous vehicle should accelerate or decelerate depending on the category of road that corresponds to the image captured by the front camera (straight, curved, ascending, descending, etc.).
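As a rough sketch of how values flow through such a layered model, the Python below passes the attributes through each layer in turn and picks the category with the highest final score. Everything specific here is an assumption made for illustration: the function names, the hand-picked weights, the two road categories, and the ReLU activation (keep positive sums, replace negative ones with zero), which the text does not specify.

```python
def layer_output(inputs, layer_weights):
    """Compute one layer: each row of layer_weights belongs to one neuron."""
    outputs = []
    for neuron_weights in layer_weights:
        total = sum(w * x for w, x in zip(neuron_weights, inputs))
        outputs.append(max(0.0, total))  # ReLU activation (an assumption)
    return outputs


def forward(attributes, network):
    """Feed the attributes through every layer; each output is the next input."""
    values = list(attributes)
    for layer_weights in network:
        values = layer_output(values, layer_weights)
    return values


def classify(attributes, network, categories):
    """Return the category whose final-layer neuron has the highest score."""
    scores = forward(attributes, network)
    return categories[scores.index(max(scores))]


# Hypothetical network: 2 attributes -> 2 hidden neurons -> 2 categories.
NETWORK = [
    [[1.0, 0.0], [0.0, 1.0]],    # first layer, one row of weights per neuron
    [[1.0, -1.0], [-1.0, 1.0]],  # final layer, one neuron per category
]
CATEGORIES = ["straight", "curved"]

print(classify([3.0, 1.0], NETWORK, CATEGORIES))
print(classify([1.0, 3.0], NETWORK, CATEGORIES))
```

In a real system the weights are not chosen by hand; they are found during training from the examples.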

The 2012 model used a backpropagation of error algorithm to automatically adjust the weights so that each adjustment would bring the network closer to the solution. The algorithm, investigated by Hinton since the 1980s, traverses the network with the attribute values of an example, compares the result to the correct value, and then goes back through the layers adjusting the weights that contributed the most to the error in each one. The adjusted weights are used with the next example in a cycle that is repeated until the examples run out or the values of the weights no longer change.
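The cycle of going forward, comparing, and going back can be sketched for a very small network as follows. This is a minimal illustration under stated assumptions, not the 2012 model: one hidden layer, sigmoid neurons, a squared-error measure, a fixed learning rate, and made-up starting weights and examples. Note that in this standard formulation every weight is adjusted in proportion to its contribution to the error, so the weights that contributed most change most.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, hidden_w, output_w):
    """Forward pass: return the hidden-layer values and the single output."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(ws, x))) for ws in hidden_w]
    output = sigmoid(sum(w * h for w, h in zip(output_w, hidden)))
    return hidden, output


def backprop_step(x, target, hidden_w, output_w, learning_rate=0.5):
    """One forward pass, one comparison, one backward adjustment."""
    hidden, output = forward(x, hidden_w, output_w)
    # Error signal at the output for the loss 0.5 * (output - target) ** 2
    delta_out = (output - target) * output * (1.0 - output)
    # Go back one layer: each hidden neuron's share of the error
    delta_hidden = [delta_out * w * h * (1.0 - h)
                    for w, h in zip(output_w, hidden)]
    new_output_w = [w - learning_rate * delta_out * h
                    for w, h in zip(output_w, hidden)]
    new_hidden_w = [[w - learning_rate * d * xi for w, xi in zip(ws, x)]
                    for ws, d in zip(hidden_w, delta_hidden)]
    return new_hidden_w, new_output_w


def train(examples, hidden_w, output_w, epochs=200):
    """Repeat the cycle, using the adjusted weights with the next example."""
    for _ in range(epochs):
        for x, target in examples:
            hidden_w, output_w = backprop_step(x, target, hidden_w, output_w)
    return hidden_w, output_w


def squared_error(examples, hidden_w, output_w):
    return sum(0.5 * (forward(x, hidden_w, output_w)[1] - t) ** 2
               for x, t in examples)


# Hypothetical data and starting weights, chosen only for illustration.
EXAMPLES = [([1.0, 0.5], 1.0), ([0.2, 1.0], 0.0)]
HIDDEN_W = [[0.1, -0.2], [0.3, 0.4]]
OUTPUT_W = [0.2, -0.1]

print("error before:", squared_error(EXAMPLES, HIDDEN_W, OUTPUT_W))
trained_hidden, trained_output = train(EXAMPLES, HIDDEN_W, OUTPUT_W)
print("error after: ", squared_error(EXAMPLES, trained_hidden, trained_output))
```

For simplicity this sketch stops after a fixed number of repetitions rather than checking whether the weights have stopped changing.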

This was the breakthrough that unleashed the revolution, as it allowed the learning of deep neural networks with multiple layers, tens or hundreds of them, each made up of hundreds or thousands of neurons, to be done automatically at high speed, only limited by the capacity of the computer equipment.

Today deep learning systems achieve results that exceed those of a human being in more and more activities, and they encourage the constant exploration of applications in new fields, which is transforming many industries and economies. This is thanks to the use of multiple graphics processing units operating in parallel (originally designed for games, but which proved very effective at these calculations), network models with neurons that connect to others in the same layer or in previous layers, and the use of millions of examples.

Given all this, there is no doubt that deep learning will mark a milestone in the history of artificial intelligence. In the end, in the battle of classification, Perceptron has defeated dogs and cats.

To remember:

Machine learning. A branch of Artificial Intelligence that is responsible for the discovery of patterns and relationships in the data to make predictions or make decisions.

Deep learning. Machine learning technique that uses deep neural networks to solve classification problems.

Classification. Machine learning technique in which, from thousands upon thousands of examples with attributes and the category in which each example is classified, patterns and relationships are found to classify new examples.

Deep neural networks. A model of connected neurons consisting of a layer that receives inputs, several layers of neurons connected to each other, and a final layer of outputs.

Backpropagation. Iterative algorithm that, after each repetition, travels backwards through a deep neural network adjusting the weights of the connections based on their contribution to the error.
