Ethics and Artificial Intelligence: From words to deeds

The advancement of Artificial Intelligence (AI) in recent years has been accompanied by a growing discussion of the ethical implications of its use. During 2019, several institutions published proposed principles for the ethical development and deployment of AI and machine learning (ML) tools. These documents include the Montreal Declaration promoted by the Université de Montréal, the European Commission's Ethics Guidelines for Trustworthy AI, the AI principles recommended by the OECD Council, and the final statement of the Robotics, Artificial Intelligence and Humanity workshop organized by the Vatican.

These statements of principles join many more published by companies and organizations, in a trend that no one seems to want to be left out of. There are so many that research papers comparing them have been published, and there is even a website that analyzes the topics and words they contain.

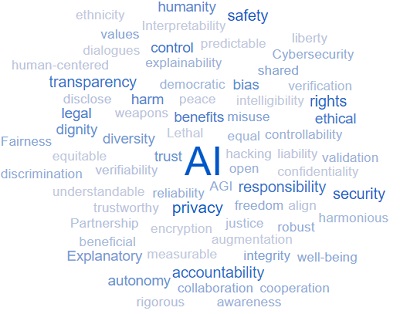

Image published in Linking Artificial Intelligence Principles

The image above is a word cloud of the nearly 30 early documents analyzed by a project of the Chinese Academy of Sciences and the Berggruen Institute of the United States. If we take the most used words and separate them into those related to the characteristics of the tool and those related to its development and use, we can get an idea of what we are looking for with these principles of ethics in AI.

| Tool-related | Related to its development and use |
| --- | --- |
| Security · Control · Damage · Cybersecurity · Shared · Protection · Explanatory · Transparency | Humanity · Values · Bias · Rights · Legality · Benefits · Misuse · Ethical · Dignity · Justice · Diversity · Confidence · Responsibility · Privacy · Accountability · Autonomy |

- AI tools are applied in domains with a high impact on people's lives. It is no longer about recommending movies or identifying cats in photographs; it is about transporting passengers, hiring staff, approving loans or monitoring the population.

- Delegating to a system the tasks of interacting with human beings and/or making decisions with legal implications can create uncertainty about who is responsible and who should be accountable before the law, as in accidents involving self-driving vehicles.

- Algorithms reveal and reproduce biases and prejudices that went unnoticed when the decisions were made by a person, either because the biases were not explicit or because the number of decisions was smaller. One example is discarding female candidates because women were not hired in the past.

- The use of AI tools amplifies the ability to influence people's preferences and can change our behavior, which raises questions about how to preserve people's freedom and autonomy in their decisions.

- The culture of technology companies, which prizes results, speed and risk-taking, is not entirely compatible with the development of high-impact tools. These companies should look more like the pharmaceutical industry than the electronics industry.

According to the word cloud we saw earlier, it seems that we are looking for AI systems that are safe to use, with mechanisms to prevent damage and take control when they fail, that are protected against attacks, and that are transparent and can explain their decisions. We want them to be developed and used for the benefit of humanity, without biases, with respect for the dignity, autonomy, diversity, rights and privacy of the person, as well as for the rule of law, protected against misuse and with schemes of responsibility and accountability that build trust.

In this scenario, how can we ensure the ethical development and use of AI tools? To this day, out of lack of knowledge and fear of limiting innovation, we have operated with an approach of testing first and, if necessary, regulating afterwards. As people's lives, futures or freedom come into play, this approach begins to generate conflicts and weakens confidence in the industry, which is why the subject is now being discussed so widely.

Are all these individual statements of principles and pronouncements by companies sufficient? In my opinion, no. Each of these statements reflects one company's particular vision of a subject of public interest. In addition, not all of them include concrete measures, criteria for documenting and measuring compliance, or independent verification procedures.

In this context, the documents issued by the OECD and the European Commission, as well as the Montreal Declaration and the statement from the workshop held at the Vatican, are an important step forward, as they provide elements for each country to build an enabling environment for the ethical development and use of AI, regardless of its values and beliefs. They also lay the foundation for an effort to create international rules for the development and deployment of trustworthy AI tools, which would facilitate their safe adoption and make their benefits available to a greater number of people.

To talk about ethics is to touch on an intrinsically personal subject, so any effort to establish universally applicable principles of conduct is often dismissed. However, the personal character of ethical thinking and beliefs does not justify avoiding agreements, nor is it an obstacle to reaching them, on an issue such as the development and deployment of AI and ML tools, which are already of critical importance to our future.

To reinforce the above, it is worth quoting Amartya Sen, Nobel laureate in economics. In his book "The Idea of Justice", where he builds a theory of justice capable of absorbing different points of view, he states that:

"For the emergence of a shared and beneficial understanding of many substantive issues of rights and duties (and also of just and unjust acts) there is no need to insist that we must have agreed to complete ordinances or universally accepted partitions of the just...".
