Taking Artificial Intelligence from the lab to the street is a whole different matter

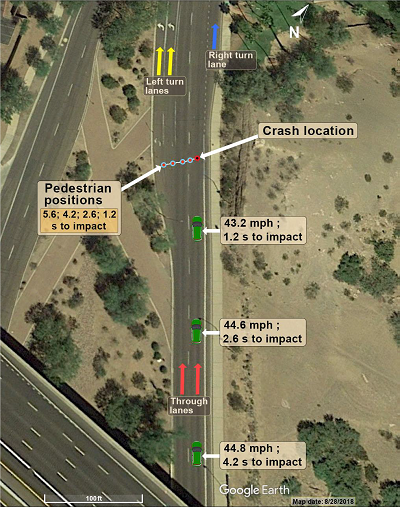

On March 18, 2018, an Uber SUV running in autonomous mode, with a backup operator in the driver's seat, struck and killed Elaine Herzberg in Tempe, Arizona. The vehicle hit the 49-year-old woman as she crossed a four-lane road, pushing a bicycle at her side, outside a designated crosswalk. Ms. Herzberg was taken to a hospital, where she died from her injuries, becoming the first fatality in history in an accident involving an autonomous vehicle.

The accident received extensive media coverage and raised questions about how these new technologies are tested, which led Uber and other companies to suspend their autonomous vehicle tests for a few months. Uber quickly reached a financial settlement with the victim's family to avoid further legal problems, and Ms. Herzberg's family sued the city of Tempe. After investigating the case, the Yavapai County (Arizona) Attorney stated that there was no basis for criminal liability on Uber's part.

Acting within its jurisdiction, the U.S. Government's National Transportation Safety Board (NTSB) investigated the accident. As part of its mandate, the NTSB investigates and determines the probable cause of air, sea, rail and road transport accidents, as well as pipeline accidents. To do this, it applies a robust investigative methodology that, rather than assigning blame, seeks to establish the causes and contributing factors of an accident in order to issue recommendations that prevent it from happening again.

On November 19, 2019, the NTSB published its accident report. It highlights aspects of the accident that should draw the attention of authorities, companies and the general public to the protocols followed in designing, approving and running tests aimed at deploying Artificial Intelligence (AI) and Machine Learning (ML) technologies. This matters equally for the use of AI in machines operating in the physical world and for systems that make high-impact decisions.

The NTSB concluded that the probable cause of the accident was the failure of the vehicle's backup operator to monitor the driving environment and the operation of the automated driving system. Distracted by her cell phone, she did not take control of the vehicle in time when the AI systems for image recognition and the sensors failed to identify the pedestrian. Overconfidence in automated vehicle systems, even in the much less advanced systems that are already commercially available, is becoming a matter of concern.

The report points to five factors that contributed to the accident, the first three attributable to an inadequate safety culture at Uber Technologies: (1) inadequate safety risk assessment procedures, (2) ineffective oversight of vehicle operators, (3) lack of adequate mechanisms for addressing operators’ automation complacency, (4) Ms. Herzberg's crossing outside a crosswalk, and (5) insufficient oversight of automated vehicle testing by the Arizona Department of Transportation.

Image: positions of Ms. Herzberg and the autonomous vehicle during the 5.6 seconds between detection and impact. Source: NTSB Accident Report

The report acknowledges that both Uber Technologies and the Arizona Department of Transportation have taken steps to strengthen their procedures and address the findings. However, its content confirms the need for some regulation to ensure that the design, testing and deployment of AI and ML tools that may significantly affect or harm people or their property meet standards that guarantee risks are properly assessed and prevented.

As more complex applications move from the lab to real life, technology companies need to adjust their priorities and develop a culture of risk prevention suited to the conditions of the activity in which their innovations will be applied, which can be very different from the conditions they are used to. This process is not straightforward, given the internal and external pressures to deliver results, so it helps to have mandatory rules and standards that complement internal policies, which are often vague.

The risks and effects of applications such as autonomous vehicles, or any other machine operated with AI, are much more evident. But automating high-impact decisions with ML tools also creates risks that must be prevented, to avoid unintended consequences that reduce the benefits of the technology or diminish confidence in it. In all these cases, decisions are delegated to intelligent agents that take actions affecting people (read our article on the subject).

Like any death in an accident, the death of Elaine Herzberg is a tragedy, even more so because, as in most cases, the investigations concluded that it could have been avoided. I hope that Ms. Herzberg will be remembered not as the first fatal victim of an accident with an autonomous vehicle, but as the case that led to stronger measures to ensure safety in the testing and deployment of autonomous vehicles. That way we would make sure her loss was not in vain.
