Computer Vision: The World Through the Eyes of Artificial Intelligence

While all the senses contribute to our perception of reality, sight is the one that makes us feel most in contact with the world. With it we know and recognize, locate ourselves and guide our movements, appreciate beauty, read and write. Lack of vision makes us feel vulnerable and somewhat isolated from the reality around us. Although humans have ways to compensate for blindness and live full lives, in most species not seeing means death.

As a result of hundreds of millions of years of evolution, the sense of sight is a sophisticated system, highly interconnected and adapted to its environment, which in a fraction of a second receives a stimulus, transmits it, interprets it and generates a reaction. In this way, an animal decides whether to attack or flee, a baseball player hits a home run and a surgeon performs a delicate operation.

It is therefore amazing that in 60 years the technology has been developed to unlock a cell phone with our face or eyes, to have a camera identify and follow faces or smiles, or to have an application tell us what object we are pointing the camera at and where we can buy it. In fact, computer vision is one of the areas of Artificial Intelligence (AI) where human-level performance has been surpassed.

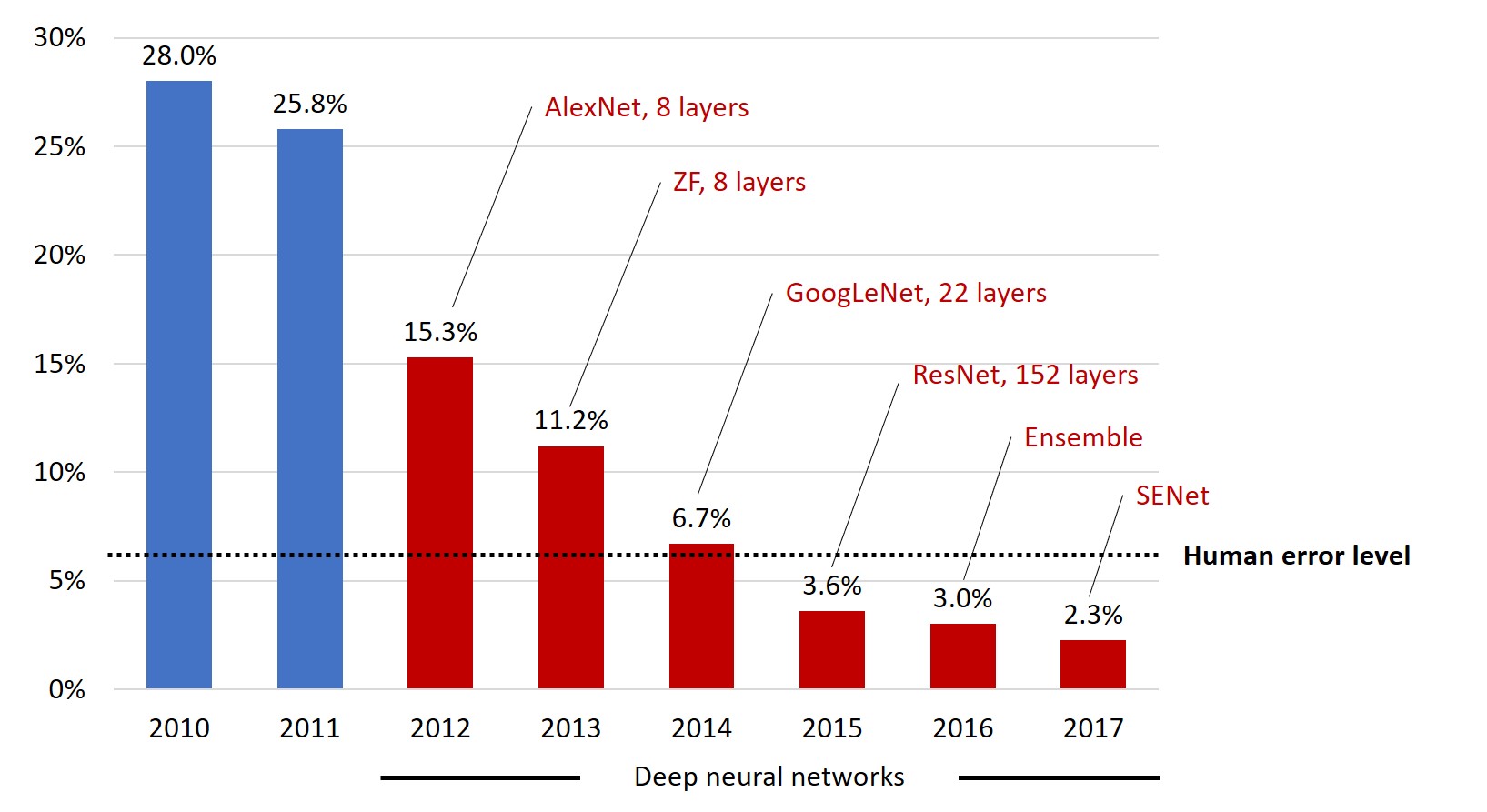

The graph shows the evolution of the error rate in the task of identifying the category of an image taken from ImageNet, an image database with 14 million images from more than 21 thousand categories that is used as a benchmark to evaluate algorithms and systems through contests. In 2012, with the use of deep (multi-layered) neural networks, the error rate dropped 10 points in a year. From there, deeper neural networks achieved ever-lower error rates, surpassing human accuracy in 2015. (Read our article on how deep neural networks work.)

The success achieved with deep neural networks inspired the development of new techniques and models, which have generated extraordinary advances in the five processes linked to computer vision:

- Image classification. Predict the categories or concepts that are applicable to an image.

- Semantic segmentation. Divide the image into well-defined regions, groups of pixels, which can be identified and labeled separately.

- Object detection. Locate the objects that appear in an image and identify the category each belongs to, usually by framing and labeling them.

- Instance segmentation (instantiation). Take segmentation to a higher level of detail, to distinguish different individuals of the same category, such as people or vehicles.

- Object tracking. Follow an identified object as it moves through a video. It is a generalization of detection, segmentation, or instantiation, as a video is a series of images.
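To make the last item concrete, here is a minimal Python sketch of tracking as detection repeated over frames. It is an illustration under stated assumptions, not the method used by any particular system: it assumes a detector has already produced bounding boxes for each frame, and it links boxes between consecutive frames by greedy matching on intersection over union (IoU). The names `iou`, `track`, and the `min_iou` threshold are hypothetical choices for this example.

```python
from typing import Dict, List, Tuple

# A box is (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def track(frames: List[List[Box]], min_iou: float = 0.3) -> List[Dict[int, Box]]:
    """Assign a track id to every detected box, frame by frame.

    Each box inherits the id of the best-overlapping box from the
    previous frame, or gets a new id if nothing overlaps enough.
    Tracks with no match in the current frame are simply dropped.
    """
    tracks: Dict[int, Box] = {}  # track id -> box in the previous frame
    next_id = 0
    history: List[Dict[int, Box]] = []
    for detections in frames:
        assigned: Dict[int, Box] = {}
        unmatched = dict(tracks)  # previous boxes not yet claimed
        for box in detections:
            best_id, best_score = None, min_iou
            for tid, prev in unmatched.items():
                score = iou(box, prev)
                if score >= best_score:
                    best_id, best_score = tid, score
            if best_id is None:
                best_id = next_id  # start a new track
                next_id += 1
            else:
                del unmatched[best_id]  # each track is used at most once
            assigned[best_id] = box
        tracks = assigned
        history.append(assigned)
    return history


# Two objects drifting to the right; the detector's output order changes
# in the last frame, but each object keeps its id.
frames = [
    [(0, 0, 10, 10), (50, 50, 60, 60)],
    [(2, 0, 12, 10), (52, 50, 62, 60)],
    [(54, 50, 64, 60), (4, 0, 14, 10)],
]
history = track(frames)
```

Greedy matching is the simplest possible choice. It can swap identities when objects cross or are briefly hidden, which is why practical trackers typically add motion models or appearance features on top of this idea.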

[Figure: object tracking. Images originally published here.]

The possibility of performing all these processes in real time, as well as the feasibility of deploying them on devices such as cell phones, has led to the widespread use of computer vision techniques in all kinds of applications, among which we can mention:

- The operation of autonomous vehicles. New computer vision techniques, along with advances in reinforcement learning, have been a determining factor in autonomous vehicles reaching their first commercial tests.

- Facial recognition for identification. It works well when used to identify a person from images of themselves, such as on a cell phone. However, its large-scale use is disputed, both because training on non-representative example sets produces errors that carry high costs for individuals and because of the invasion of privacy it represents.

- Biometric identification. Fingerprint or iris recognition can be implemented efficiently and easily on many types of devices, such as cell phones and other portable devices.

- Interpretation of medical images, such as X-rays or CT scans, for the detection of tumors and other medical conditions. This is another task in which human performance has been surpassed.

- Identification of fractures, cracks and obstructions in ducts, ships, aircraft and structures, from videos or inspection images taken by robots in places that are inaccessible or unsafe for a person.

- Detection of suspicious behaviors in surveillance camera footage, based on analysis of the movement of segmented instances. As with facial recognition, the main topic of discussion is the high cost that an error imposes on the person affected by it.

The revolution caused by the successful use of deep neural networks in computer vision, as well as in the interpretation of oral and written language, represents what Kai-Fu Lee, in his book "AI Superpowers", calls the third wave of Artificial Intelligence, the wave of perception. The first two waves, internet AI and business AI, were those of machine-learning data analysis, which led to the automation of decision-making in many fields (read our article on the subject). The fourth wave will be that of autonomy, in which the techniques of the previous waves will allow an intelligent agent to effectively perceive its environment, make decisions and act for itself in a complex environment.

In this way, we are fast approaching a time when powerful AI and machine learning tools will be at our disposal to augment our capabilities and produce benefits for society at large. The fact that AI systems outperform humans in certain tasks does not pose a threat by itself, since the risks do not come from a good interpretation of an image or a series of data, but from what is done with such an interpretation.
